CS444

Class 23 Preemption

Reading: Chap 5 to

pg. 332, 339-347, skip DMA, 348-360, Chap 10, Sec. 10.5: 771-773, 775-776,

--also 5.5.2 Clock

Software (pp 390-392)

Preemption occurs

when the scheduler switches processes when the current process is still runnable,

i.e. not blocked or exiting. This is done when the process has used up its

“quantum” of CPU time. It is basic to the handling of timesharing between

runnable processes, in real OS’s. But our hw3 system did not do preemption, so

we call its scheduler a non-preemptive scheduler. Real OS’s use preemptive

schedulers.

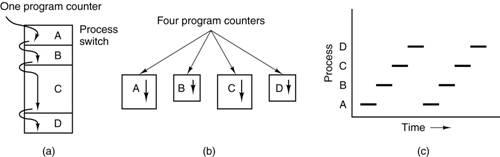

The basic

timesharing round-robin was first shown on pg. 85, case (c), as shown below,

but the discussion there did not mention preemption because it hadn’t been

defined yet in the book. It is just

called process switching, which of course is true, but not very specific. But it is preemption.

Process Switch Cases

--at blocking, due

to a blocking syscall or page fault

--at process exit

--at process

creation

--preemption

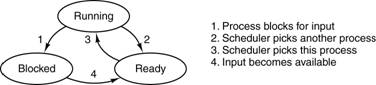

On pg. 90, we see

the classic process state diagram with the 4 transitions, as shown below. The

transition (2) from running to ready is actually preemption, though again the

discussion does not name it.

On pg. 148,

non-preemptive and preemptive schedulers are discussed, and the importance of

the tick interrupt handler in implementing preemption. The tick handler is what counts down the

quantum for a process, so should be the first to notice that a quantum has been

exhausted.

In fact, other

interrupt handlers can also cause rescheduling that results in preemption, but

it would work to just do it in the tick handler.

In the last class we

looked at the generic interrupt handler execution steps listed on pg. 349. This

list assumes a preemptive scheduler (i.e., a “real OS”), because it reschedules

processes from the interrupt handler. It

only does this, however, after finishing all the work for the interrupting

device. The scheduling work is done “on

the way back” from the interrupt.

The fact that all

the device-work is complete before rescheduling is done is important to

maintain the principle that interrupt handlers should run fast, and never block

on events. With rescheduling at the end,

the whole interrupt handler does not return until this process (the one interrupted)

is scheduled again, which could be many ms later.

However, the little

runt stack that remains from the interrupt handler execution at the scheduling

decision doesn’t matter to anything else on the system. It just stays there

until the process is scheduled again, then gets popped off.

The reason that all

serious OS’s have preemptive schedulers is that without preemption, user

processes can run arbitrarily long using the CPU, as long as they don’t use

any system calls. Thus the crucial capability is preemption of user execution,

not kernel execution. Thus in some OS’s,

there is a test in the interrupt handler before trying preemption, checking if

the interrupted execution was user level, and only trying preemption if

so. Linux and Windows have “fully

preemptive schedulers”, meaning that much kernel code can be preempted as well

as all user code. Some kernel code has

to be protected from preemption.

5.5.2 Clock Software

(pp 390-392)

The periodic interrupts from the timer are called ticks, and the interrupt handler is called the tick handler. The kernel uses it for: pg. 390

- timekeeping, incrementing the kernel-global system time variable

- counting down the quantum and causing preemption when it reaches zero

- accounting CPU usage

- handling alarm-wakeup system calls

- handling other time-delayed actions of the kernel such as network timeouts

- handling periodic housecleaning actions (garbage collection of various kinds)

Now the second point is crucial to hw4. More exactly, the quantum is the tick count left for this process to use before it gets preempted. We’ll add a new member of PEntry, p_quantum for it.

Then we have (first draft of code) at the end of the tick handler: Here QUANTUM should be 4, for 4 10-ms ticks.

if (--curproc->p_quantum == 0) {

/* preempting, later start

over with full quantum */

curproc->p_quantum

= QUANTUM;

debuglog(“%”); /* mark it in

debuglog */

schedule(); /* find

another process to run */

}

Note that we don’t need to preempt process 0, because it runs as long as no user processes are runnable. But schedule() will choose it again if necessary, so no special test for it is really needed.

Note that this code is part of an interrupt handler, so it

runs with IF=0, since we never use sti in our interrupt handlers (Linux does

use sti in interrupt handlers, to allow recursive interrupts.) Thus IF =

0 at the call to schedule(), as it requires.

Note that with this simple re-initialization of p_quantum, when schedule() runs, all the preempted processes have the same p_quantum value, so it isn’t useful to use p_quantum in the decision-making (which process to schedule next.) You can leave schedule() itself as before.

With this coding,

kernel preemption is happening

Note that we can’t tell whether a tick happened from user code execution or kernel code execution, unless we add an “in-kernel” flag. So we’re going to be preempting kernel execution (parts that are running with IF=1) as well as user execution. All user code runs with IF=1. That’s OK, we just have to be careful to do cli() where needed.

Is it bad to preempt kernel execution? No, it’s what Linux and (parts of) Windows does, in order to responsive. Consider process A running a long system call and process B becoming ready because of a disk-done interrupt. If kernel preemption is allowed, B can run before A finishes that system call, a good thing if B is more important than A.

Classic UNIX (pre-90s) disallowed preemption of kernel code, a practice known as “limited preemption.” Linux is now using unlimited preemption, but evolved from limited preemption (up to and including v. 2.4) . Linux 2.6 onwards is “fully preemptive”, meaning that that (a subset of) kernel code can be preempted.

What’s the downside of allowing kernel preemption? More forms of race conditions. Consider the line of code in hw3.soln, incrementing a kernel global variable:

number_of_zombie++;

Suppose this is implemented by two instructions, one to read the variable, and another to write the new value. Then suppose process 1 executed the first instruction and then got preempted. Process 2 executed both instructions, rereading the same value that process 1 saw (old) and writing old + 1. But when process 1 resumes, it also writes old + 1. One increment action got lost. This is called the lost update problem.

Solution? Use kernel mutex. For hw4, make IF=0 for this code. (Or verify that it is only one instruction, since interrupts occur strictly between instructions.)

But before you start worrying too much, note that this problem required a global variable, whereas most of the increments occur to local variables. Local variables have homes on the process stack, and each process has its own private stack. Thus local variables are process-private, and can’t suffer lost updates. It would be good to do an audit of updates to global variables in your hw5 kernel code to check for such problems.

Note that using curproc in an interrupt handler is unusual, not done in i/o (not timer) interrupt handlers. These execute as a “guest” in a “random” process image, and shouldn’t access anything about the current process. But the timer interrupt is being used to time the current process, so this access is just right.

Hw4 Preemption

(optional part for implementation, but be sure to understand it)

First, let’s look at the hw3 (no preemption case) output, and then see how it changes for hw4 with preemption. Note that one char takes about 1 ms to be output at 9600 baud, the baudrate of systems 1-10. Systems 11-14 have much slower processors, so not recommended for testing sched.

What we want to show here is that preemption improves performance of the system, by doing CPU-bound work in little spurts, allowing the other processes to queue up output when they get to run, and they get to run much more often.

Every time the outputting process runs, it fills the output queue, and these characters are typically output while a CPU-bound process is executing. You see that over milliseconds, the system is doing two things at once with one CPU: running a loop and outputting chars at interrupt level. That is happening even with a non-preemptive scheduler, but happens much more often with a preemptive scheduler.

For uprog[123].c, recall that the processes run like this, where ● denotes the computation loop:

Main 1 runs like this: aaaaaaaaaazzz●AAAAAAAAAA

main 2 runs like this: bbbbbbbbbbb

main 3 runs like this: ●ccccccccccc

For

more details, see the handout on Preemption

Using hw4 provided files: no

preemption

Actual output, except kprintf “SHUTTING DOWN”:

*aaaaaa**********cccccccc*ccaaaazz*z*********AAA*AAAAAAAbb*bb*bbbbbb*

Total

runtime: 26 stars, so 260 ms

Hw4 Execution with

preemption

Preemption of process 1 by process 2 is marked by %|(1-2) in the debug log. The tick occurs every 10ms. In the i/o bound case, the tick happens once every 10 chars, or possibly every 9 chars (allowing for some overhead.) We’ve settled on QUANTUM = 4, so at the 4th tick in a CPU-bound process (for example, process 1 of uprog123), preemption occurs. So a quantum lasts 40 ms.

The following is a run for this case. We can see an effect of preemption from watching the run: instead of the system stopping output, stuck in the computation loop in main1 or main3, it keeps doing output from the other processes while doing that computation.

Actual output, except kprintf “SHUTTING DOWN”:

*aaaaaa****aaaazz****z****bbbbbb**ccccc*cc***bbbbcc***AAAAA*AAAAAc*

In the preemptive case, output happens several times during the CPU bound execution. Since the 6 chars take 6 ms to output, and the loop in main1 runs 40 ms before being preempted, there are (about) 34 ms of CPU expended at the spots on the CPU-bound bar, just before the 2 preemptions, and another spot where the loop is finishing up.

What we’re seeing here is that preemption makes the system as a whole more responsive and more efficient, allowing more overlap between computing with the CPU and doing i/o.